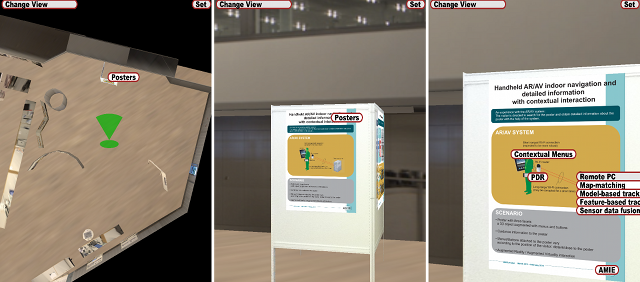

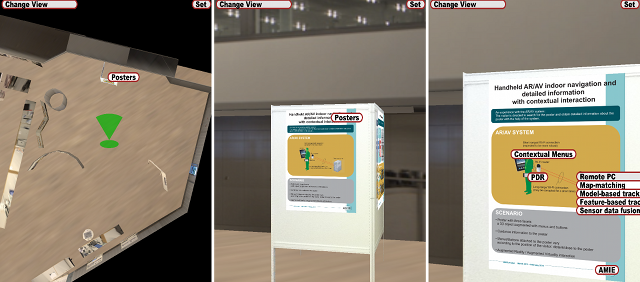

The demonstration shows a handheld system that guides a visitor indoors to a specific exhibit item and then presents detailed information about that exhibit through contextual Augmented Virtuality interaction. The system provides the following key functions:

Any participant can try the Augmented Virtuality system: they are directed to find a target exhibit and then obtain further detailed information about it. What makes our demonstration unique is the integration of indoor navigation with interactive Augmented Virtuality functions for augmenting an exhibit.

This work was presented at the ISMAR 2011 demonstrations. It was realised in collaboration with AIST as part of the AMIE project.

[Video on YouTube AIST channel] [Posters]

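Masakatsu Kourogi<sup>1</sup>, Koji Makita<sup>1</sup>, Thomas Vincent<sup>2</sup>, Sébastien Pelurson<sup>2</sup>, Takashi Okuma<sup>1</sup>, Jun Nishida<sup>1</sup>, Tomoya Ishikawa<sup>1</sup>, Laurence Nigay<sup>2</sup>, Takeshi Kurata<sup>1, 3</sup>.

<sup>1</sup> AIST, <sup>2</sup> University Joseph Fourier Grenoble 1, <sup>3</sup> University of Tsukuba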

This demo shows a handheld AR/AV (Augmented Reality/Augmented Virtuality) system that navigates users indoors to their destinations and displays detailed instructions about target objects through contextual interaction. Localization relies on two functions: PDR (Pedestrian Dead Reckoning) localization and image-based localization. The main feature of the demo is the complementary use of PDR and the image-based method together with virtualized reality models.

PDR uses the built-in sensors (3-axis accelerometers, gyroscopes and magnetometers) of a waist-mounted device to estimate position and direction on a 2D map. Its accuracy is improved by map matching and by image-based localization. The maps of the environment used for map matching are created automatically from the virtualized reality models.

Image-based localization estimates the 6-DoF (degrees of freedom) extrinsic camera parameters in two phases, a matching phase and a tracking phase. The matching phase uses correspondences between reference images included in the virtualized reality models and images from the camera of the handheld device; the output of the PDR localization narrows the search for reference images. The tracking phase tracks interest points in the camera images to estimate relative motion.
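To make the interplay between PDR and reference-image search more concrete, the following is a minimal sketch rather than the system's actual implementation. It assumes a simplified PDR model in which each detected step advances the 2D position by a fixed step length along the current heading, and it assumes each reference image is tagged with a 2D capture position. The names `Pose2D`, `ReferenceImage`, `pdr_step`, `candidate_references` and the `max_dist` threshold are hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float        # metres, in the 2D map frame
    y: float        # metres, in the 2D map frame
    heading: float  # radians, counter-clockwise from the map x-axis


@dataclass
class ReferenceImage:
    name: str  # hypothetical identifier of a reference image
    x: float   # assumed capture position in the same map frame (metres)
    y: float


def pdr_step(pose: Pose2D, step_length: float, heading: float) -> Pose2D:
    """Advance the pose by one detected step along the given heading.

    This is plain dead reckoning: errors accumulate over time, which is
    why the text corrects PDR with map matching and image-based fixes.
    """
    return Pose2D(
        x=pose.x + step_length * math.cos(heading),
        y=pose.y + step_length * math.sin(heading),
        heading=heading,
    )


def candidate_references(pose, refs, max_dist=3.0):
    """Return reference images within max_dist metres of the PDR position,
    nearest first, so that image matching only considers nearby views."""
    nearby = [
        r for r in refs
        if math.hypot(r.x - pose.x, r.y - pose.y) <= max_dist
    ]
    return sorted(nearby, key=lambda r: math.hypot(r.x - pose.x, r.y - pose.y))


if __name__ == "__main__":
    pose = Pose2D(0.0, 0.0, 0.0)
    for _ in range(3):  # three steps of 0.7 m along the x-axis
        pose = pdr_step(pose, 0.7, 0.0)
    refs = [ReferenceImage("near", 2.0, 0.5), ReferenceImage("far", 20.0, 0.0)]
    print([r.name for r in candidate_references(pose, refs)])
```

The distance filter stands in for the "efficient searching of reference images" described above: only images captured near the PDR estimate are passed on to the more expensive matching phase. A real system would also need to account for the uncertainty of the PDR estimate when choosing the search radius.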

This work was presented at the ISMAR 2012 demonstrations. It was realised in collaboration with AIST as part of the AMIE project.

[Video on YouTube AIST channel]

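Koji Makita<sup>1</sup>, Masakatsu Kourogi<sup>1</sup>, Thomas Vincent<sup>2</sup>, Takashi Okuma<sup>1</sup>, Jun Nishida<sup>1</sup>, Tomoya Ishikawa<sup>1</sup>, Laurence Nigay<sup>2</sup>, Takeshi Kurata<sup>1, 3</sup>.

<sup>1</sup> AIST, <sup>2</sup> University Joseph Fourier Grenoble 1, <sup>3</sup> University of Tsukuba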